R&R Analysis for Attribute Measurements

Attribute Measures

- Attribute measures can range from objective GO/NOGO dimensional gages to fairly subjective cosmetic sorting measures.

- Techniques for evaluating attribute measurement systems are less statistically rigorous than the techniques used for variable measurement systems.

Techniques for Evaluating Attribute Measurements

- “Seeding the Sort”

- The Signal Detection Approach

- The Effectiveness Method

Notes:

- All techniques should use at least 20 parts or samples with each checked at least two or three times by at least two appraisers; more is better.

- Some of the parts should be slightly above the upper spec; some should be slightly below the lower spec.

- Samples analyzed should be randomized before testing to prevent bias. Randomize before each testing cycle.
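The randomization note above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed procedure: the function name `randomized_run_order`, the appraiser labels, and the fixed seed are all assumptions made for the example. It reshuffles the parts for every appraiser in every testing cycle, so no one sees the parts in a predictable order.

```python
import random

def randomized_run_order(part_ids, appraisers, cycles, seed=None):
    """Return (cycle, appraiser, part_id) tuples with a fresh shuffle
    of the parts for each appraiser in each testing cycle."""
    rng = random.Random(seed)  # seed only so the plan can be reproduced
    plan = []
    for cycle in range(1, cycles + 1):
        for appraiser in appraisers:
            order = list(part_ids)
            rng.shuffle(order)  # new random order before every pass
            plan.extend((cycle, appraiser, part) for part in order)
    return plan

# Hypothetical study: 20 parts, 2 appraisers, 3 checks each
plan = randomized_run_order(range(1, 21), ["A", "B"], cycles=3, seed=1)
for row in plan[:5]:
    print(row)
```

The study team keeps the plan; the appraisers only receive the parts in the listed order.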

“Seeding the Sort”

- “Bad” parts or samples are fed to the measurement system anonymously.

- These bad parts must be identifiable to the team conducting the MSA, but not to the appraisers.

- If bad parts get through or good parts get rejected, the attribute measurement system should be improved.
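A seeded sort can be scored with simple set arithmetic. The sketch below is illustrative only: `audit_seeded_sort` is a hypothetical helper, the part IDs are made up, and it assumes every part that was not seeded is known to be good.

```python
def audit_seeded_sort(all_parts, seeded_bad, rejected):
    """Compare what the sort rejected with what the team seeded.

    Assumes every non-seeded part in the lot is good.
    Returns (escapes, false_rejects) as sorted lists of part IDs.
    """
    all_parts, seeded_bad, rejected = set(all_parts), set(seeded_bad), set(rejected)
    if not (seeded_bad <= all_parts and rejected <= all_parts):
        raise ValueError("seeded and rejected IDs must come from the lot")
    escapes = sorted(seeded_bad - rejected)        # bad parts that got through
    false_rejects = sorted(rejected - seeded_bad)  # good parts that were rejected
    return escapes, false_rejects

# Hypothetical lot of 50 parts with 4 seeded bad parts
escapes, false_rejects = audit_seeded_sort(
    all_parts=range(1, 51),
    seeded_bad={7, 19, 33, 42},
    rejected={7, 19, 28, 42},
)
print("escapes:", escapes)              # escapes: [33]
print("false rejects:", false_rejects)  # false rejects: [28]
```

Either list being non-empty is the signal, per the rule above, that the attribute measurement system should be improved.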

The Signal Detection Approach

- Collect parts or samples representing the range of the process.

- For high capability processes, some parts should be made specifically for the measurement system analysis: some out of specification and some close to the tolerance limits.

- Measure these parts to obtain reference values.

- Submit the parts to the measurement system being studied.

- Use two or more appraisers.

- Each part should go through the measurement system at least 3 times.

- Identify parts as good or bad and correctly or incorrectly measured.

- Arrange the parts in descending order of reference value.

- Assign each part to one of three regions:

- Region I is for “Bad” parts identified correctly as “Bad” by all appraisers.

- Region II is for the “Gray Area” where good parts are sometimes identified as bad and bad parts are sometimes identified as good.

- Region III is for “Good” parts identified correctly as “Good” by all appraisers.

- Calculate the spread of Region II, d.

$$d = \frac{d_{USL} + d_{LSL}}{2}$$

- $d_{USL}$ = (last reference value outside the upper spec with all parts identified as bad) − (first reference value inside the upper spec with all parts identified as good).

- $d_{LSL}$ = (last reference value inside the lower spec with all parts identified as good) − (first reference value outside the lower spec with all parts identified as bad).

- If you are only evaluating a one-sided tolerance, use only dUSL or dLSL to define the spread of the region that the measurement system has problems in.
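The region assignment and the spread calculation can be sketched as follows. The spec limits, the reference values, and the calls are invented for illustration, and the list is shorter than the 20 parts recommended earlier. The helper names `region` and `region_ii_width` are hypothetical, and the sketch assumes that a two-sided d is the average of the upper and lower widths.

```python
LSL, USL = 0.45, 0.55  # hypothetical spec limits

# (reference value, calls from 2 appraisers x 3 trials); made-up data
study = [
    (0.580, ["bad"] * 6),
    (0.566, ["bad"] * 6),
    (0.562, ["bad", "bad", "good", "bad", "bad", "bad"]),
    (0.548, ["good", "bad", "good", "good", "good", "bad"]),
    (0.540, ["good"] * 6),
    (0.500, ["good"] * 6),
    (0.460, ["good"] * 6),
    (0.452, ["good", "good", "bad", "good", "good", "good"]),
    (0.440, ["bad", "good", "bad", "bad", "bad", "bad"]),
    (0.430, ["bad"] * 6),
    (0.420, ["bad"] * 6),
]

def region(ref, calls):
    """I: bad part, always called bad; III: good part, always called good; else II."""
    in_spec = LSL <= ref <= USL
    if not in_spec and all(c == "bad" for c in calls):
        return "I"
    if in_spec and all(c == "good" for c in calls):
        return "III"
    return "II"

def region_ii_width(ordered):
    """Width of the gray area at one spec limit.

    `ordered` holds (reference, region) pairs walking from outside
    the limit toward the middle of the tolerance.
    """
    regions = [reg for _, reg in ordered]
    n_bad = 0
    while n_bad < len(regions) and regions[n_bad] == "I":
        n_bad += 1  # leading run of unanimously rejected bad parts
    if n_bad == 0 or "III" not in regions[n_bad:]:
        raise ValueError("need a Region I part outside the limit and a Region III part inside it")
    first_good = n_bad + regions[n_bad:].index("III")
    return abs(ordered[n_bad - 1][0] - ordered[first_good][0])

# Descending reference values, as in the procedure above
ranked = sorted(((ref, region(ref, calls)) for ref, calls in study),
                key=lambda pair: pair[0], reverse=True)
d_usl = region_ii_width(ranked)        # walk down from above the upper spec
d_lsl = region_ii_width(ranked[::-1])  # walk up from below the lower spec
d = (d_usl + d_lsl) / 2                # assumed: average of the two widths
print(f"dUSL={d_usl:.3f}  dLSL={d_lsl:.3f}  d={d:.3f}")
```

For a one-sided tolerance, only the relevant call to `region_ii_width` would be used and the averaging step skipped.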

Estimate R&R

- AIAG allows the use of 6σ to cover 99.7% of the variation, instead of the historical 5.15σ, which covers 99% of the variation. This is at the discretion of the organization.

![]()

- Calculate %GR&RTV.

![]()

- The total variation could be determined from the parts used in the study (using the sample standard deviation of the reference values).

- If the process capability is high, so that out-of-spec parts had to be generated for the measurement system study, then do not use the sample standard deviation of all of the parts in the study. Instead, use the historical process standard deviation in the calculation for TV.

- Calculate %GR&RTolerance

![]()

- Determine if the measurement system is acceptable or needs to be improved.

- For an attribute measurement system, the acceptability of the measurement system really depends on the process capability.

- Use the general rules of 10% and 30%, but adjust them depending on whether you have a high capability process (e.g. Cp = 2.0), where a higher %GR&RTV might be acceptable, or a low capability process (e.g. Cp = 1.0), where a lower %GR&RTV might be required. Solid engineering judgment and statistical knowledge are required to adjust these general rules.
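The two percentages can be sketched as below. Several things here are assumptions rather than formulas taken from the text: the Region II spread d is used directly as the GR&R spread, total variation is taken as k times the historical process standard deviation (k = 6 or 5.15, matching the organization's choice), and the tolerance is USL minus LSL. The function name `percent_grr` and the numbers are hypothetical.

```python
def percent_grr(d, process_sigma, lsl, usl, k=6.0):
    """Return (%GR&R of total variation, %GR&R of tolerance).

    Assumptions (not from the source text):
      * d, the Region II spread, is used as the GR&R spread
      * TV = k * process_sigma, with k = 6.0 or 5.15
      * tolerance = usl - lsl (two-sided spec)
    """
    if process_sigma <= 0 or usl <= lsl:
        raise ValueError("process_sigma must be positive and usl must exceed lsl")
    total_variation = k * process_sigma
    tolerance = usl - lsl
    return 100.0 * d / total_variation, 100.0 * d / tolerance

# Hypothetical inputs: d from a signal detection study, historical sigma
pct_tv, pct_tol = percent_grr(d=0.028, process_sigma=0.010, lsl=0.45, usl=0.55)
print(f"%GR&R (TV) = {pct_tv:.1f}%, %GR&R (Tolerance) = {pct_tol:.1f}%")
```

The results would then be judged against the 10% and 30% guidelines, adjusted for process capability as described above.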

The Effectiveness Method

- This method looks at how effective an attribute measurement system is in accepting good parts and sorting out bad parts.

- It also looks at the probability of a bad part being missed and a good part being rejected (a false alarm).

- To use this method, some bad parts or samples must be included in the analysis.

- Again, the bad parts or samples must be identifiable to the team conducting the study, but not to the appraisers being evaluated as part of the measurement system study.

- Each of the parts or samples should be evaluated multiple times by the appraisers.

- Use at least 20 parts (more is preferable).

- Two or more appraisers.

- Two or more checks per part/sample per appraiser.

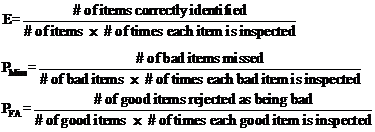

- Calculate the Effectiveness (E), Probability of a Miss (PMiss), and Probability of a False Alarm (PFA) for each appraiser and for the overall measurement system.

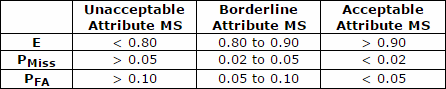
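The per-appraiser and overall calculations can be sketched as follows. The definitions used are the commonly cited ones and are an assumption here: E is correct decisions over total decisions, the probability of a miss is bad parts accepted over evaluations of bad parts, and the probability of a false alarm is good parts rejected over evaluations of good parts. The function name `effectiveness_metrics` and the data are made up for illustration.

```python
from collections import Counter

def effectiveness_metrics(records):
    """records: list of (true_status, call) pairs, each 'good' or 'bad'.

    Assumed definitions:
      E      = correct decisions / total decisions
      P_miss = bad parts called good / evaluations of bad parts
      P_FA   = good parts called bad / evaluations of good parts
    """
    counts = Counter(records)
    good_total = counts[("good", "good")] + counts[("good", "bad")]
    bad_total = counts[("bad", "bad")] + counts[("bad", "good")]
    if good_total == 0 or bad_total == 0:
        raise ValueError("the study needs both good and bad parts")
    correct = counts[("good", "good")] + counts[("bad", "bad")]
    return {
        "E": correct / (good_total + bad_total),
        "P_miss": counts[("bad", "good")] / bad_total,
        "P_FA": counts[("good", "bad")] / good_total,
    }

# Hypothetical results: 20 parts x 2 checks per appraiser (15 good, 5 bad)
appraiser_a = ([("good", "good")] * 29 + [("good", "bad")] * 1
               + [("bad", "bad")] * 9 + [("bad", "good")] * 1)
appraiser_b = ([("good", "good")] * 27 + [("good", "bad")] * 3
               + [("bad", "bad")] * 10)

results = {
    "A": effectiveness_metrics(appraiser_a),
    "B": effectiveness_metrics(appraiser_b),
    "overall": effectiveness_metrics(appraiser_a + appraiser_b),
}
for name, metrics in results.items():
    print(name, {key: round(value, 3) for key, value in metrics.items()})
```

Pooling all records gives the overall measurement system figures, while the per-appraiser figures show who drives the misses or false alarms.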

- Evaluate the attribute measurement system: